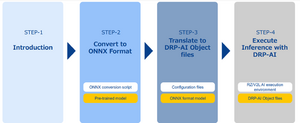

DRP-AI Translator

DRP-AI Reserved Memory

The DRP-AI reserved memory is required for AI inference processing. This memory area holds the AI model binary, weights, biases, parameters, activations, and configuration files.

DRP v5.00 to v7.00

The latest version of the DRP library moves the start address of the reserved memory. As a result, DRP-AI applications that worked with DRP version 5.00 (BSP v1.00) produce errors with the latest DRP-AI drivers.

To resolve the issue, regenerate the DRP-AI models with the DRP-AI Translator. First, modify the address map YAML configuration file so that it points to the new reserved memory start address.
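Because only the top-level `addr` value changes, the edit can be scripted when several address map files need updating. The following is a minimal Python sketch, not a Renesas tool: `set_base_address` is a hypothetical helper, and it assumes the file contains exactly one unindented `addr:` line holding a hexadecimal value, as in the address map template on this page.

```python
import re

# Hypothetical helper (not part of the DRP-AI Translator).
# Matches only an unindented "addr: 0x..." line, i.e. the top-level base address.
_ADDR_LINE = re.compile(r"^addr:[ \t]*0x[0-9A-Fa-f]+[ \t]*$", re.MULTILINE)


def set_base_address(yaml_text: str, new_addr: int) -> str:
    """Return yaml_text with its top-level addr replaced by new_addr."""
    updated, count = _ADDR_LINE.subn(f"addr: 0x{new_addr:08X}", yaml_text)
    if count != 1:
        # Refuse to guess if the file does not have exactly one base address line.
        raise ValueError(f"expected exactly one top-level addr line, found {count}")
    return updated


if __name__ == "__main__":
    original = 'name: "ALL"\naddr: 0x80000000\nlst_elemsp:\n- { name: "data_in"}\n'
    # 0x70000000 is the RZ/V2L DRP-AI reserved memory start address given on this page.
    print(set_base_address(original, 0x70000000))
```

Editing the line as text, rather than loading and re-dumping the YAML, leaves the rest of the file, including the order of the element list, unchanged.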

DRP-AI Translator Address Map YAML

The configuration file requires only two modifications:

- Start from the template below.

```yaml
name: "ALL"
addr: 0x80000000
lst_elemsp:
- { name: "data_in"}
- { name: "data"}
- { name: "data_out"}
- { name: "work"}
- { name: "weight"}
- { name: "drp_config"}
- { name: "drp_param"}
- { name: "desc_aimac"}
- { name: "desc_drp"}
```

- Set `addr` to the start address of the DRP-AI reserved memory. For the RZ/V2L this is:

```yaml
addr: 0x70000000
```

- After the DRP-AI Translator runs, it generates a memory map that shows how much memory the translated AI model requires.
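Once the memory map is available, a quick sanity check is to compare the model's footprint against the reserved region. The sketch below is a hypothetical helper, not a DRP-AI Translator feature. It assumes you copy the per-section sizes from the generated memory map by hand and know the size of the reserved region on your board, and it treats the sections as packed back to back, so any padding between sections is not counted.

```python
from typing import Mapping


def check_fits(base_addr: int, region_size: int, section_sizes: Mapping[str, int]) -> int:
    """Return the (exclusive) end address of the model's memory use.

    Hypothetical helper: sums the section sizes as if the sections were packed
    back to back from base_addr (alignment padding is ignored), and raises
    ValueError if the total does not fit in a region of region_size bytes.
    """
    total = sum(section_sizes.values())
    if total > region_size:
        raise ValueError(
            f"model needs {total:#x} bytes, but the region at {base_addr:#010x} "
            f"is only {region_size:#x} bytes"
        )
    return base_addr + total


if __name__ == "__main__":
    # Placeholder sizes for illustration only; substitute the values from your
    # own memory map and your board's reserved-memory size.
    sizes = {"data_in": 0x1000, "weight": 0x20000, "work": 0x8000}
    end = check_fits(0x70000000, 0x100000, sizes)
    print(f"model memory ends at {end:#010x}")
```

If the memory map lists an explicit address for each section, comparing the end address of the last section with the end of the reserved region is more precise than summing sizes.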

DRP-AI Translator Pre/Post Processing YAML

DRP-AI Implementation Guide

Renesas provides implementation guides for the AI models it has implemented; they can be found here. These guides show how to convert a PyTorch AI model to ONNX format, run the DRP-AI Translator, and finally execute the DRP-AI translated model on the target board.